12 min to read

Ship AI Tools Like Apps with Docker's MCP ToolKit

No more Conflicts!

Ship AI Tools Like Apps: Docker’s MCP ToolKit

The AI revolution is here, and every company wants their services to work seamlessly with large language models (LLMs). Wait a minute, I do recall a quote from “Ajeet’s talk “ is now every company an AI company?” and we all know the answer. Now the Model Context Protocol (MCP) – Anthropic’s open standard that’s rapidly becoming the bridge between LLMs and real-world applications. With Windows Copilot, Google Gemini, OpenAI’s ChatGPT, and Claude all embracing MCP, the message is clear: if you want LLMs to use your service, you need an MCP server.

But here’s where many companies are getting it wrong. In the rush to make their services AI-ready, they’re taking the seemingly obvious shortcut: auto-generating MCP servers from their existing OpenAPI schemas. It’s fast, it’s easy, and it technically works. But as David Gomes from Neon recently explained in his presentation, this approach misses the mark entirely.

What Exactly is MCP?

Before diving into why auto-generation fails, let’s establish what MCP actually does. Think of MCP as a universal translator between LLMs and your applications. It standardizes how LLMs can:

- Access real-world services and data

- Perform actions on behalf of users

- Understand what tools are available to them

An MCP server exposes three main components to LLMs:

- Tools (the most important): Actions the LLM can perform

- Resources: Data sources the LLM can access

- Prompts: Pre-defined conversation starters

The tools are where the magic happens. These are the actions that transform a conversational AI from a chatbot into a capable assistant that can actually get things done in your application.

The Auto-Generation Trap

Here’s the seductive logic that’s leading companies astray: “We already have an API with detailed OpenAPI documentation. Why not just convert that into an MCP server automatically?”

Several services have emerged to do exactly this – Stainless, Speakeasy, Mintlify, and others can generate an MCP server from your API schema in under a minute. The appeal is obvious: instant AI integration with zero custom development.

But this approach fundamentally misunderstands how LLMs work and what they need to be effective.

The Evolution: From APIs to AI-Native MCP Design

To better understand why auto-generation fails, let’s examine how different approaches to MCP server creation stack up:

Design Philosophy Comparison

| Aspect | Traditional API | Auto-Generated MCP | Purpose-Built MCP |

|---|---|---|---|

| Primary User | Human developers | LLMs (by proxy) | LLMs (by design) |

| Tool Count | 50-100+ endpoints | 50-100+ tools (1:1 mapping) | 5-15 focused tools |

| Descriptions | Technical documentation | Direct API description copy | LLM-optimized with examples |

| Abstraction Level | Resource management | Resource management | Task-oriented goals |

| Workflow Support | Single operations | Single operations | Multi-step processes |

| Testing Strategy | Unit/integration tests | Basic functionality tests | AI behavior evaluations (evals) |

| Security Model | Authentication/authorization | Authentication/authorization | Container isolation + auth |

Real-World Example: Database Operations

Let’s see how the same database functionality would be exposed across different approaches:

| Operation | Traditional API | Auto-Generated MCP | Purpose-Built MCP |

|---|---|---|---|

| Create Database | POST /databases |

create_database tool |

create_database tool |

| Run Migration | POST /databases/{id}/query |

execute_sql tool |

prepare_database_migration + complete_database_migration |

| Backup Database | POST /databases/{id}/backup |

create_backup tool |

create_safe_backup_with_verification |

| Monitor Performance | GET /databases/{id}/metrics |

get_database_metrics tool |

analyze_performance_and_recommend_optimizations |

User Experience Impact

Traditional API Experience:

User Request: "Set up a database for my todo app"

├── Developer writes code to call create_database

├── Developer writes migration scripts

├── Developer configures connection strings

├── Developer sets up monitoring

└── Result: Working database (requires technical expertise)

Auto-Generated MCP Experience:

User Request: "Set up a database for my todo app"

├── LLM calls create_database tool

├── LLM struggles with configuration options

├── LLM may choose wrong migration approach

├── LLM lacks context for best practices

└── Result: Functional but potentially problematic setup

Purpose-Built MCP Experience:

User Request: "Set up a database for my todo app"

├── LLM calls optimized create_database tool with smart defaults

├── LLM uses guided migration workflow with safety checks

├── LLM follows built-in best practices

├── LLM receives context-aware recommendations

└── Result: Production-ready database with best practices applied

Four Critical Problems with Auto-Generated MCP Servers

1. Choice Paralysis: Too Many Tools Confuse LLMs

Most APIs are comprehensive by design. Neon’s API, for example, has 75-100 different endpoints covering every possible operation. But here’s what many don’t realize: LLMs perform terribly when given too many options.

Despite the hype around million-token context windows, LLMs actually work better with focused, limited contexts. When presented with dozens of tools, they become indecisive and often choose incorrectly. It’s like asking someone to pick a restaurant from a phone book – more options create more confusion, not better decisions.

2. API Documentation Wasn’t Written for AI

Your existing API documentation was written for human developers who can Google unfamiliar concepts, read between the lines, and apply common sense. LLMs need something entirely different:

- Direct, explicit language: No assuming background knowledge

- Rich examples: LLMs learn patterns better with concrete examples

- Context about when to use each tool: Not just what it does, but why and when

Neon solved this by rewriting their tool descriptions in XML format, providing extensive context about each tool’s purpose and appropriate usage scenarios. This isn’t just a nice-to-have – it’s essential for reliable LLM performance.

3. APIs vs. Tasks: Different Mental Models

Traditional APIs are designed for automation and low-level resource management. They’re built with developers in mind who want granular control over system resources. But LLMs think more like humans – they want to accomplish high-level goals and tasks.

An LLM doesn’t care about the intricacies of database connection pooling or memory allocation. It wants to “create a to-do app with user authentication” or “analyze sales data from last quarter.” The abstraction levels are completely different.

4. Missing the Innovation Opportunity

Perhaps most importantly, auto-generation prevents you from creating genuinely useful AI-native workflows. When you simply expose existing API endpoints, you’re limited to what those endpoints can do individually.

Consider Neon’s approach to database migrations. They could have exposed a generic “Run SQL” tool and called it done. Instead, they created a sophisticated two-step workflow:

- “Prepare Database Migration”: Stages the migration on a temporary branch

- “Complete Database Migration”: Applies the migration after testing

This workflow includes built-in safety measures that guide the LLM through testing before committing changes. It’s something that makes perfect sense for AI but would be overly complex for a traditional API endpoint.

Building MCP Servers the Right Way

So what should you do instead? Here’s a practical approach:

Start with Strategy, Not Generation

Begin by asking: “What would an AI assistant want to accomplish with our service?” Don’t start with your existing API – start with user goals and work backwards.

The Hybrid Approach

If you want to leverage existing work, consider this process:

| Step | Action | Goal |

|---|---|---|

| 1 | Auto-generate from API schema | Create initial foundation |

| 2 | Ruthlessly cut unnecessary tools | Aim for 5-15 essential actions |

| 3 | Rewrite descriptions for LLMs | Add examples and explicit context |

| 4 | Design purpose-built tools | Create AI-native workflows |

| 5 | Build comprehensive tests | Ensure reliability through evals |

Focus on Testing and Iteration

LLMs are non-deterministic, so you need to test extensively. Run your evaluations hundreds or thousands of times to ensure reliability. Think of it as load testing, but for AI behavior instead of server performance.

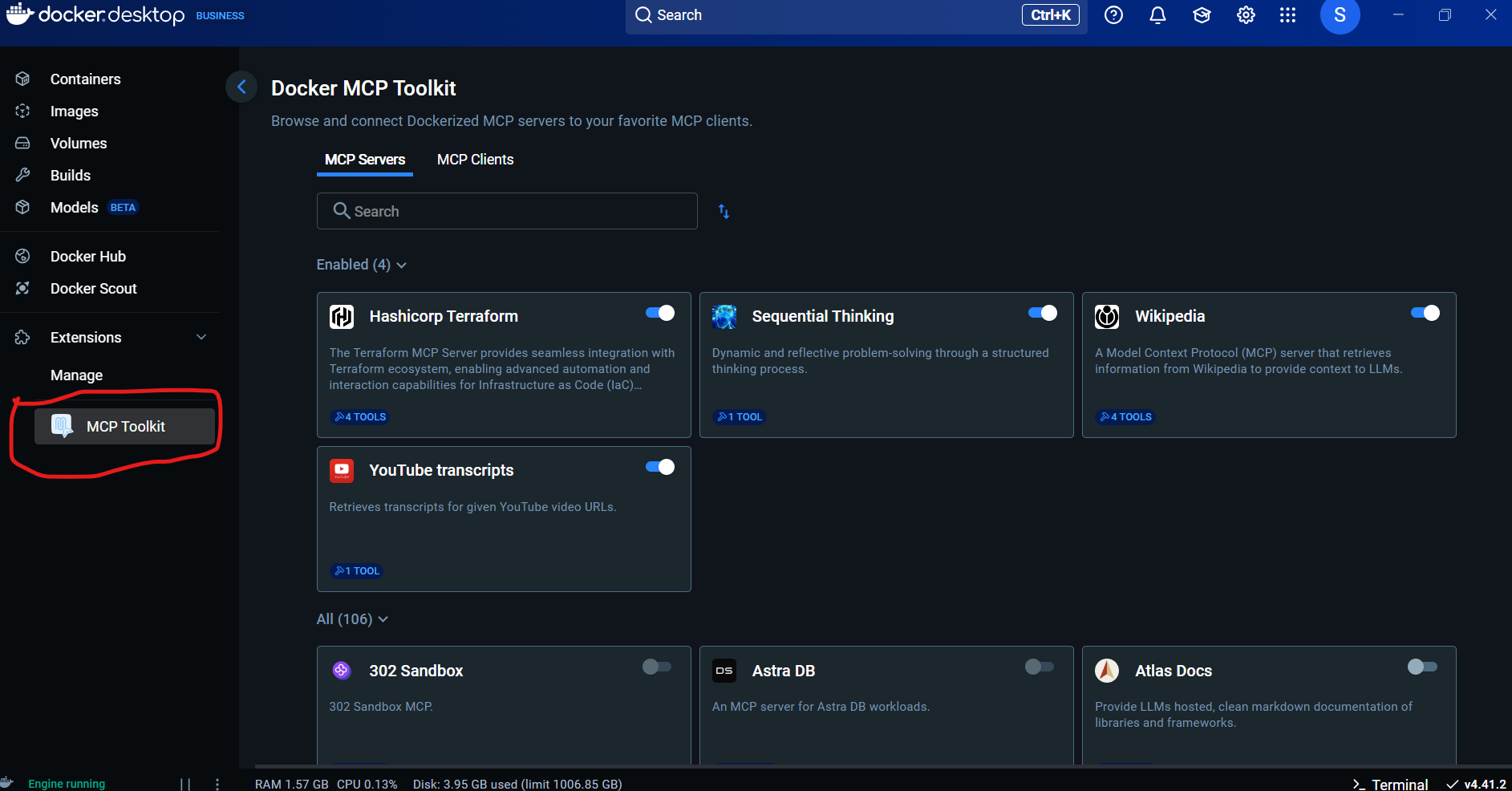

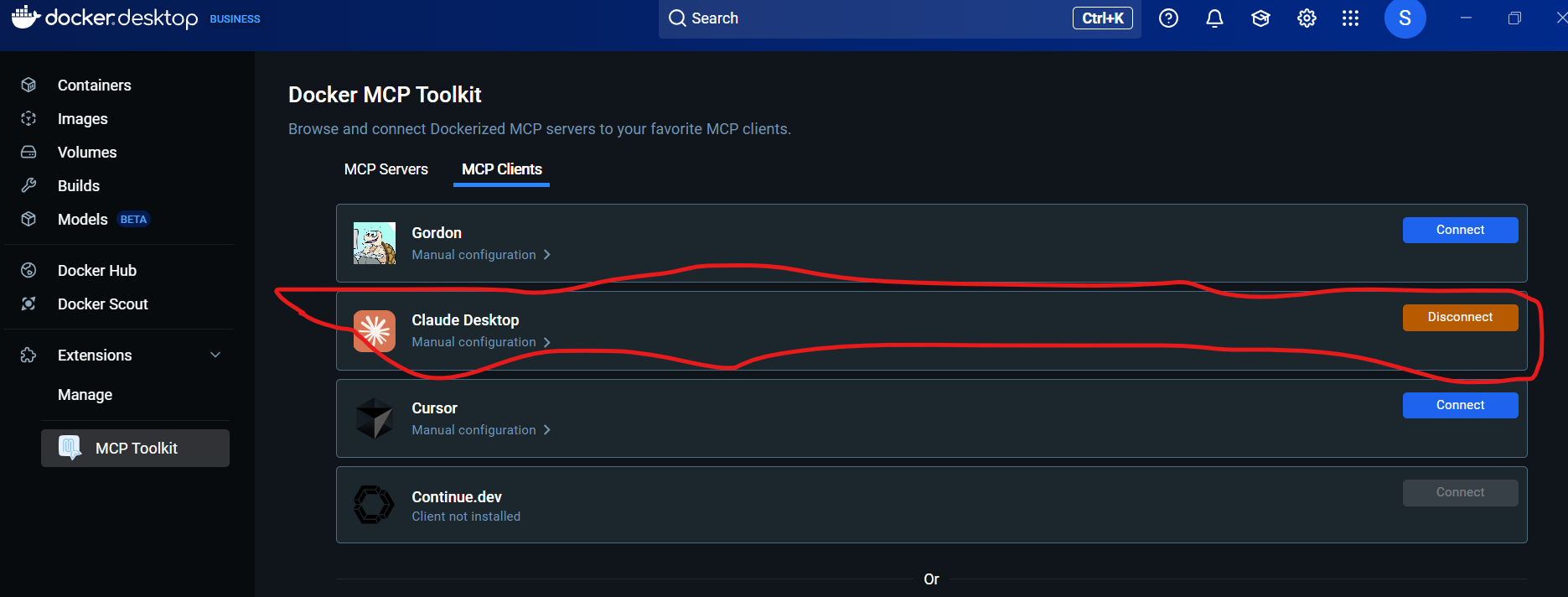

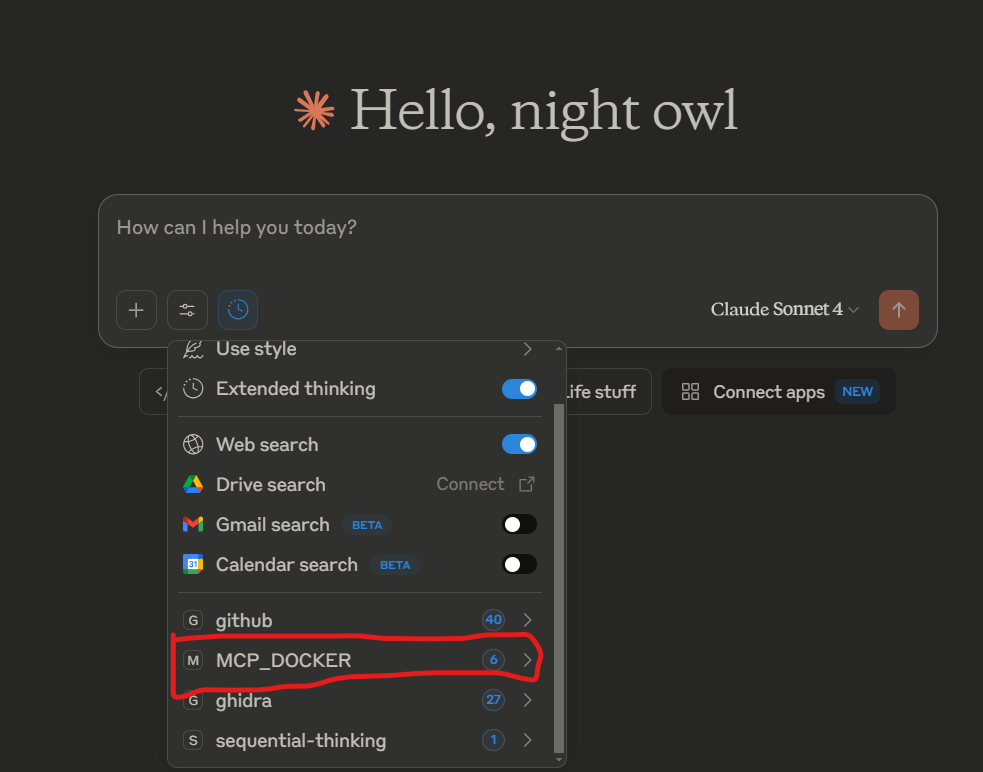

How Docker MCP Toolkit Revolutionizes MCP Development

As per official Documentation Docker MCP toolKIT is a Docker Extension allowing to connect dockerized MCP servers to MCP Clients.

Docker ToolKit with Claude MCP Client

While the principles above guide good MCP server design, there’s still the practical challenge of building, deploying, and managing these servers securely. This is where Docker’s recently launched MCP Catalog and Toolkit comes in, addressing the production-readiness gap that has been holding back MCP adoption.

The Production Readiness Problem

Traditional MCP server development faces several critical challenges:

Traditional MCP Development Challenges

├── Environment Conflicts

│ ├── Version conflicts between dependencies

│ └── "Dependency hell" across different projects

├── Complex Setup

│ ├── Manual configuration required

│ └── Platform inconsistencies (Windows/Mac/Linux)

├── Security Vulnerabilities

│ ├── Full host system access

│ └── Plaintext credential exposure

└── Discovery Fragmentation

├── Searching Discord threads

└── Hunting through Twitter replies

Docker MCP Toolkit Architecture

Docker’s MCP Toolkit solves these problems by bringing the same containerization benefits that revolutionized application deployment to the MCP ecosystem:

┌─────────────────────────────────────────────────────────────────┐

│ Docker MCP Ecosystem │

│ │

│ ┌─────────────────┐ ┌──────────────────┐ ┌─────────────┐ │

│ │ Docker MCP │───▶│ Trusted MCP │───▶│ Docker │ │

│ │ Catalog │ │ Servers │ │ Desktop │ │

│ │ (Docker Hub) │ │ (100+ Verified) │ │ Extension │ │

│ └─────────────────┘ └──────────────────┘ └─────────────┘ │

│ │ │

│ ┌──────────────────┐ │ │

│ │ Gateway MCP │◄───────────┘ │

│ │ Server │ │

│ │ (Dynamic Routing)│ │

│ └─────────┬────────┘ │

└─────────────────────────────────────┼───────────────────────────┘

│

┌─────────────────▼─────────────────┐

│ MCP Clients │

│ Claude • Cursor • VS Code │

│ Windsurf • continue.dev │

└───────────────────────────────────┘

Security & Management Layers:

┌─────────────────┬─────────────────┬─────────────────┐

│ Container │ Credential │ CLI Management │

│ Isolation │ Management │ │

│ • Network │ • OAuth │ • docker mcp │

│ • Memory │ • Revocation │ • Build & Run │

│ • Disk │ • Integration │ • Lifecycle │

└─────────────────┴─────────────────┴─────────────────┘

Key Features and Benefits

| Feature | Traditional Setup | Docker MCP Toolkit |

|---|---|---|

| Discovery | Manual searching, fragmented sources | Centralized catalog with 100+ verified servers |

| Installation | Complex dependency management | One-click launch from Docker Desktop |

| Security | Host access, plaintext credentials | Built-in isolation, integrated OAuth management |

| Credential Management | Manual configuration files | Integrated with Docker Hub account |

| Cross-Platform | Platform-specific issues | Container consistency across environments |

| Updates | Manual version management | Automated container updates |

Addressing Critical Security Concerns

The Docker MCP Toolkit specifically addresses several emerging security threats in the MCP ecosystem:

Security Threat Comparison

| Threat Type | Traditional MCP | Docker MCP Toolkit |

|---|---|---|

| Tool Poisoning | Malicious instructions in tool descriptions | Verified publisher model, content scanning |

| Tool Rug Pulls | Legitimate servers compromised after adoption | Docker’s secure supply chain, vulnerability scanning |

| Environment Conflicts | Version conflicts, dependency issues | Complete container isolation |

| Credential Exposure | Plaintext environment variables | Integrated credential management with revocation |

Implementation Workflow

Here’s how the Docker MCP Toolkit transforms the development workflow:

Developer Catalog Desktop Container Client

│ │ │ │ │

│ Browse │ │ │ │

│ servers │ │ │ │

│────────▶│ │ │ │

│ │ │ │ │

│ One-click install │ │ │

│──────────────────▶│ │ │

│ │ │ Launch │ │

│ │ │ isolated │ │

│ │ │ server │ │

│ │ │─────────▶│ │

│ │ │ │ Register│

│ │ │ │ with │

│ │ │◄─────────│ Gateway │

│ │ │ │ │

│ │ │ │ │ Connect

│ │ │◄─────────────────────│ to Gateway

│ │ │ │ │

│ │ │ Route │ │

│ │ │ requests │ │

│ │ │─────────▶│ │

│ │ │ │ Return │

│ │ │ │responses│

│ │ │ │────────▶│

Enterprise-Ready Features

Docker is partnering with trusted names including Stripe, Elastic, Heroku, Pulumi, Grafana Labs, Kong Inc., Neo4j, and New Relic to build a secure ecosystem. Key enterprise features include:

- Registry Access Management: Control which MCP registries are accessible

- Image Access Management: Restrict which MCP servers can be installed

- Custom MCP Server Publishing: Organizations can publish private MCP servers

- Full Enterprise Controls: Comprehensive governance and compliance features

Getting Started with Docker MCP Toolkit

The toolkit includes several components that work together:

| Component | Purpose | Key Features |

|---|---|---|

| MCP Catalog | Discovery and trust | 100+ verified servers, trusted publishers |

| Desktop Extension | Management interface | One-click launch, credential management |

| Gateway Server | Dynamic routing | Exposes enabled tools to compatible clients |

| docker mcp CLI | Command-line management | Build, run, and manage MCP servers |

This comprehensive approach means that instead of spending time on infrastructure concerns, developers can focus on what matters: designing effective AI-native workflows and tools.

The Bigger Picture

MCP represents a fundamental shift in how we think about application interfaces. Just as mobile apps required different design patterns than desktop software, AI integrations require different patterns than human-facing APIs.

The companies that recognize this early and invest in thoughtfully designed MCP servers will have a significant advantage as AI assistants become more prevalent. Those that simply auto-generate from existing APIs will find their integrations are technically functional but practically frustrating.

Conclusion

The rise of MCP is creating an inflection point for how software services integrate with AI. While the temptation to auto-generate MCP servers from existing APIs is understandable, it’s a shortcut that leads to suboptimal results.

Building effective MCP servers requires understanding that LLMs are a fundamentally different type of client. They need focused choices, explicit guidance, task-oriented abstractions, and purpose-built workflows. Companies that embrace this reality and design accordingly will create AI integrations that truly delight users.

The question isn’t whether your service will support AI integration – it’s whether you’ll do it in a way that actually works well. Your API might be perfect for developers, but it’s probably not ready for AI. And that’s exactly why MCP exists.

Comments